
Redefining personalized treatment selection in complex mental health conditions with award-winning, advanced predictive AI models



Mission

Depression affects around 1 in 8 people globally during their lifetime. Rates of depression and anxiety spiked during the COVID-19 pandemic, with studies showing a 25% global increase in these conditions. As of 2023, depression remains a leading cause of disability worldwide, with approximately 280 million adults affected each year, including over 19 million adults in the U.S.

Aifred addresses a trial-and-error approach to medication selection that hasn’t changed in decades. Nearly 70% of patients don’t find relief after their first treatment, and 30% still haven’t by their fourth.

Aifred's mission is to elevate the standard of care, building responsible, evidence-driven AI platforms that enable providers to offer personalized and effective treatment plans. With a focus on scientific rigor, we support enhanced clinical decision-making and significant improvement in patient outcomes.

Real-world, clinically validated and regulator-approved AI technology for treatment response prediction

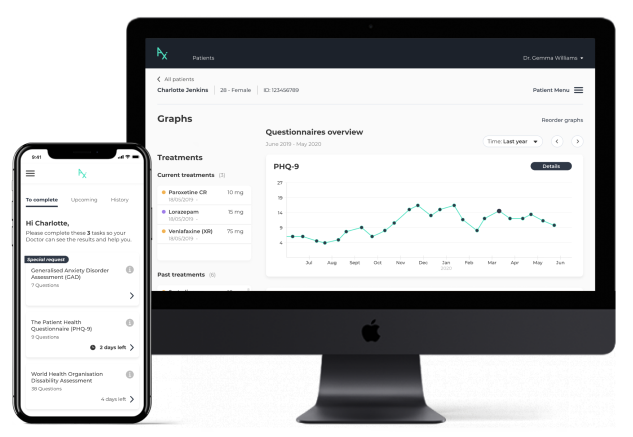

Aifred's AI model for treatment response prediction makes personalized medicine a reality. It generates predictions in real time, without the need for expensive and time-consuming tests, estimating the probability of remission for each available medication based on the unique characteristics of the individual patient.
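To make the idea of per-treatment remission probabilities concrete, here is a minimal sketch of how such predictions could be ordered for display. It is not Aifred's implementation: the function name `rank_treatments`, the placeholder treatment labels, and the probability values are all assumptions made up for illustration.

```python
from typing import Dict, List, Tuple


def rank_treatments(remission_probs: Dict[str, float]) -> List[Tuple[str, float]]:
    """Sort treatments from highest to lowest predicted remission probability."""
    for name, p in remission_probs.items():
        # Reject anything that is not a valid probability.
        if not 0.0 <= p <= 1.0:
            raise ValueError(f"probability for {name!r} must be in [0, 1], got {p}")
    return sorted(remission_probs.items(), key=lambda item: item[1], reverse=True)


# Illustrative values only; these are not outputs of any real model.
example = {"treatment_a": 0.31, "treatment_b": 0.42, "treatment_c": 0.37}
ranked = rank_treatments(example)
```

In this sketch the ranking is only a presentation aid; the choice of treatment stays with the clinician and patient.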

Even the best technology can’t change the world unless people use it. In our real-world studies, 92% of patients found our tool easy to use, and 86% of doctors found the AI model helpful in making treatment decisions.

Our AI model in major depression offers predictions for 10 first-line treatments and combinations, the largest number of treatments supported by any single model to date. Drawing on data from over 9,000 clinical trial participants, it was designed with flexibility and clinical utility at the forefront. Across 100 independent simulations, we observed a statistically significant 27.5% improvement in remission rates over baseline (results recently published in npj Mental Health Research). Our platform was approved by Health Canada in 2024, with an FDA decision pending, and has multiple patents granted and pending in the US and worldwide.

Patient outcomes proven in leading centres of excellence across North America

The patient-physician clinical decision support tool is accessible on any device, supporting both telehealth and in-person appointments. A patient enters behavioural health and limited socio-demographic information, which is first processed by a clinical algorithm based on best-evidence guidelines. The outputs help the physician understand where the patient is in the disease cycle, monitor the patient over time, and determine ‘what to do next’ at each stage, e.g. adding psychotherapy or adjusting medication. If an adjustment to, or first-time selection of, medication is required, the AI model provides evidence-based information that supports the clinician in optimizing their selection.
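The two-stage flow described above (a guideline-based step first, with the AI model consulted only when a medication decision is needed) can be sketched roughly as follows. This is an illustrative outline under stated assumptions, not the actual platform logic: `GuidelineOutput`, `decision_support`, the stub functions, the `medication_review_needed` key, and all values are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional, Tuple

Answers = Dict[str, object]  # hypothetical container for patient-entered information


@dataclass
class GuidelineOutput:
    stage: str               # where the patient is in the disease cycle
    next_step: str           # suggested action at this stage
    medication_change: bool  # whether a medication decision is needed


def decision_support(
    answers: Answers,
    guideline_step: Callable[[Answers], GuidelineOutput],
    predict_remission: Callable[[Answers], Dict[str, float]],
) -> Tuple[GuidelineOutput, Optional[Dict[str, float]]]:
    """Run the guideline step first; call the model only for medication decisions."""
    output = guideline_step(answers)
    predictions = predict_remission(answers) if output.medication_change else None
    return output, predictions


def stub_guideline(answers: Answers) -> GuidelineOutput:
    # Placeholder rule for demonstration only; not clinical guidance.
    change = bool(answers.get("medication_review_needed", False))
    step = "review medication" if change else "continue monitoring"
    return GuidelineOutput(stage="unspecified", next_step=step, medication_change=change)


def stub_model(answers: Answers) -> Dict[str, float]:
    # Made-up probabilities standing in for a real predictive model.
    return {"treatment_a": 0.31, "treatment_b": 0.42}


output, predictions = decision_support(
    {"medication_review_needed": True}, stub_guideline, stub_model
)
```

Passing the two steps in as callables keeps the guideline logic and the predictive model separable, which mirrors the description of the model as supporting, rather than replacing, the clinician's decision.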

Aifred's North American clinical trial was the first of its kind in the world for an AI-based platform in major depression. It was conducted at leading centres of excellence in both the US and Canada, including the US Department of Veterans Affairs.

Focused on patients with moderate and severe depression, use of the platform demonstrated significant impact on patient outcomes:

- Significantly improved rate of remission
- Significantly accelerated speed of improvement in symptoms
- No impact on treatment adherence
- Strong patient and clinician engagement

Full results have been peer-reviewed and published.


Views from the experts


In January 2020, XPRIZE interviewed doctors and leaders working with Aifred. Hear what they had to say.

A company born out of need, innovation, and the XPRIZE

Aifred was founded by four McGill University students who, having witnessed the struggles of friends, family members and patients, became passionate about finding a solution. Together, they decided to explore how AI could be applied to improve mental healthcare around the world. On hearing of the XPRIZE, they entered in late 2016 and incorporated the following year as a condition of continuing in the competition, and Aifred Health was born.

The Aifred team has grown a lot since then! Today, we’re fortunate to have the support of a world-class Scientific Advisory Board and valued international collaborators.